AssignmentGPT Blogs

OpenAI unveiled GPT-4 Omni (GPT-4o) at its Spring Update in San Francisco on Monday morning. Chief Technology Officer Mira Murati and OpenAI staff demonstrated the company's latest flagship model, which can hold real-time conversations in a friendly, realistic, human-like voice. This advancement represents a significant evolution in AI content creation tools.

“GPT-4o provides GPT-4-level intelligence but is much faster,” Murati said at the conference. “We think GPT-4o really changes that paradigm towards the future of collaboration, where these connections are more natural and fully accessible.”

What is ChatGPT 4o?

GPT-4o is a type of AI that can understand and produce information in several forms. It can see images, hear speech, read text, and combine all of this information to create something new. For example, it can take a written story and turn it into a picture, or listen to a song and write a melody for it.

How Does GPT-4o Work?

Details of how GPT-4o works are still scarce. The only information OpenAI gave in its announcement was that GPT-4o is a single neural network trained on text, vision and audio input.

This approach differs from previous methods, in which separate models were trained on different types of data.

However, GPT-4o is not the first multimodal model. In 2022, Tencent AI Lab developed SkillNet, a model that combined LLM transformer features with computer vision techniques to improve the ability to recognize Chinese characters.

In 2023, a team from ETH Zurich, MIT, and Stanford University developed WhisBERT, a variant of the BERT family of large language models. Although not the first, GPT-4o is more ambitious and powerful than these earlier attempts.

What Can GPT-4o Do?

GPT-4o has many potential applications. Here are a few:

- Learning: By translating and explaining words in different languages, it can help people learn a new language. Combined with other AI writers for content improvement, it creates powerful educational experiences.

- Helping people: It can convert text into speech, or speech into text, for those who have difficulty seeing or hearing.

- Creativity: By understanding and mixing different inputs, it can produce music or art, giving people another way to express their creativity.

- Customer Support: With its ability to understand multiple languages and respond quickly, GPT-4o can provide better customer service. This capability makes it competitive with other free AI text generator tools in the business communication space.

The Impact of GPT-4o

The creation of GPT-4o is a major step forward in AI. It allows AI to understand and respond in a way that is closer to how humans speak. It also breaks down language barriers, making technology accessible to more people. Its speed and real-time capabilities could transform many industries, such as telephony, customer support and online learning. Understanding the benefits of using AI writing tools helps businesses evaluate GPT-4o's potential impact.

Model Capabilities

Before GPT-4o, you could use Voice Mode to talk to ChatGPT with average latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4). According to OpenAI, GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds. These improvements show why GPT-4o stands out among the best AI writing generators in terms of response time.

To achieve this, Voice Mode used a pipeline of three separate models: one simple model transcribes audio to text, GPT-3.5 or GPT-4 takes in that text and outputs text, and a third simple model converts that text back to audio. As a result, the main source of intelligence, GPT-4, loses a lot of information: it cannot directly observe tone, multiple speakers, or background noise, and it cannot output laughter, singing, or emotion.
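
The information loss in that three-stage pipeline can be illustrated with a toy sketch. Everything here is hypothetical: `AudioClip` and the three stage functions are stand-ins invented for illustration, not OpenAI components. The point is only that once audio is reduced to plain text, the cues attached to it never reach the later stages.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical container for spoken input and its non-verbal cues."""
    words: str
    tone: str = "neutral"
    speakers: int = 1
    background_noise: bool = False


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (stand-in for a speech-to-text model): only the words survive.
    return clip.words


def respond(text: str) -> str:
    # Stage 2 (stand-in for GPT-3.5 or GPT-4): text in, text out.
    return f"You said: {text}"


def synthesize(text: str) -> AudioClip:
    # Stage 3 (stand-in for a text-to-speech model): default, flat delivery.
    return AudioClip(words=text)


def voice_mode_pipeline(clip: AudioClip) -> AudioClip:
    """Chain the three stages the way the old Voice Mode is described."""
    return synthesize(respond(transcribe(clip)))


question = AudioClip("Is it raining?", tone="worried", speakers=2, background_noise=True)
answer = voice_mode_pipeline(question)
print(answer.tone)  # neutral: the worried tone was dropped at stage 1
```

A single model that takes audio in and produces audio out has no such text-only bottleneck, which is the design difference the announcement emphasizes.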

Model Evaluations

As measured on traditional benchmarks, GPT-4o achieves GPT-4 Turbo-level performance on text, reasoning, and coding intelligence, while setting new high-water marks on multilingual, audio, and vision capabilities.

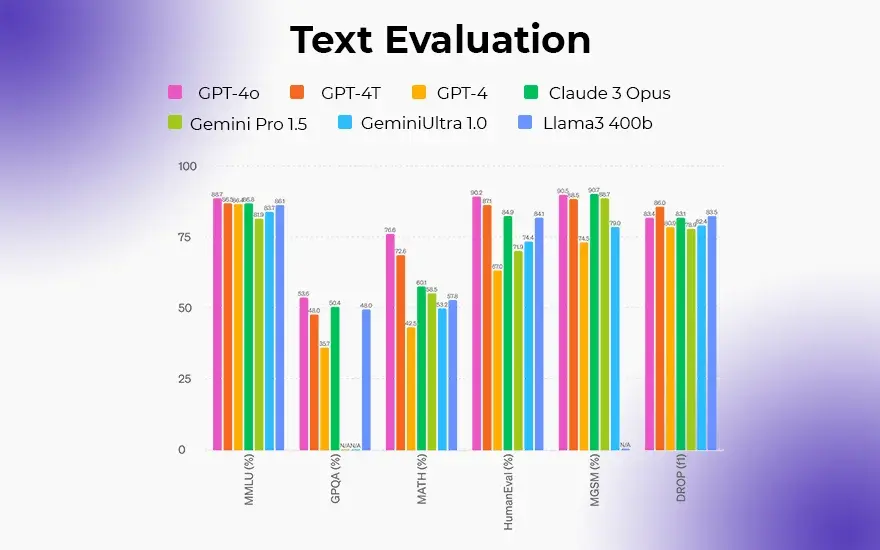

Text Evaluation

GPT-4o sets a new high score of 88.7% on 0-shot CoT MMLU (general knowledge questions). This performance level makes it particularly effective among AI tools that can think and write like humans. All of these evals were gathered with OpenAI's new simple-evals library. In addition, GPT-4o sets a new high score of 87.2% on the traditional 5-shot no-CoT MMLU. (Note: Llama 3 400B was still training at the time of these evaluations.)
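
For readers unfamiliar with the two settings, the sketch below shows roughly how a 0-shot chain-of-thought (CoT) prompt differs from a 5-shot prompt without CoT. The function names, prompt wording and toy questions are assumptions made up for this illustration; they are not the prompts used by OpenAI's evaluation library.

```python
from string import ascii_uppercase


def format_question(question: str, choices: list[str]) -> str:
    """Render a multiple-choice question with lettered options."""
    options = [f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]
    return "\n".join([question, *options])


def zero_shot_cot_prompt(question: str, choices: list[str]) -> str:
    """0-shot CoT: no solved examples, but the model is asked to reason first."""
    return (
        format_question(question, choices)
        + "\nThink step by step, then finish with 'Answer: <letter>'."
    )


def few_shot_no_cot_prompt(
    examples: list[tuple[str, list[str], str]],
    question: str,
    choices: list[str],
) -> str:
    """k-shot no-CoT: k solved examples, then the new question, answer only."""
    shots = [f"{format_question(q, c)}\nAnswer: {a}" for q, c, a in examples]
    query = f"{format_question(question, choices)}\nAnswer:"
    return "\n\n".join([*shots, query])


# Made-up toy data, for illustration only.
examples = [(f"What is {n} + {n}?", [str(n), str(2 * n)], "B") for n in range(1, 6)]
cot = zero_shot_cot_prompt("What is 2 * 3?", ["5", "6"])
few = few_shot_no_cot_prompt(examples, "What is 2 * 3?", ["5", "6"])
print(few.count("Answer:"))  # 6: five solved examples plus the open question
```

The two scores are therefore not directly comparable: one measures reasoning without examples, the other measures direct answering with five examples in context.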

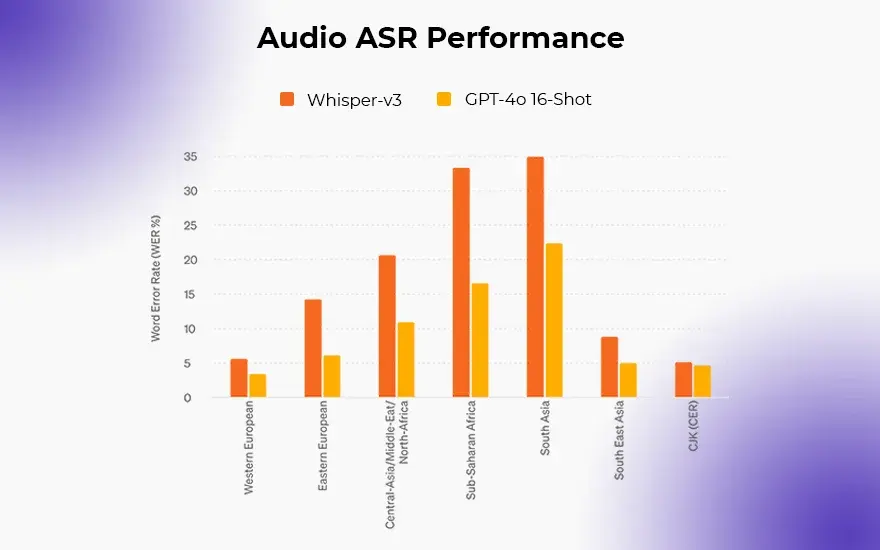

Audio ASR Performance

GPT-4o significantly improves speech recognition performance over Whisper-v3 across all languages, particularly for lower-resourced languages.

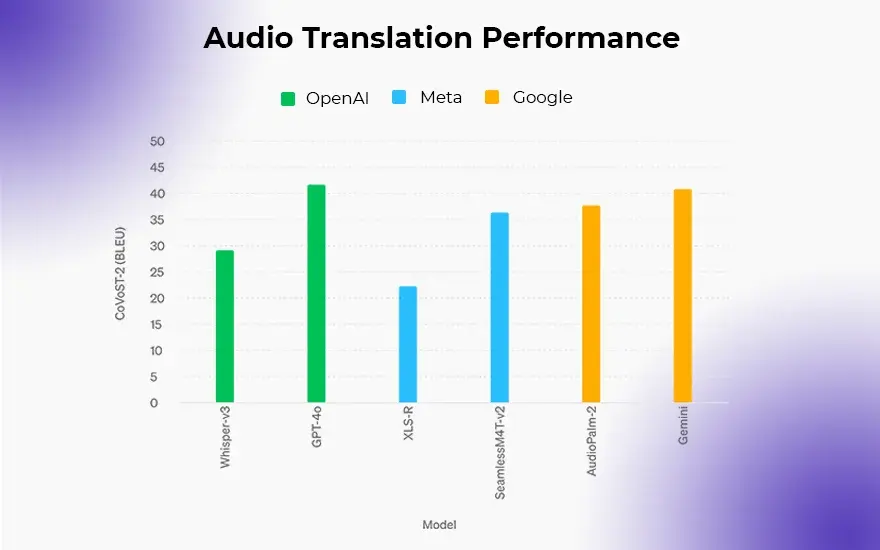

Audio Translation Performance

GPT-4o sets a new state of the art in speech translation and outperforms Whisper-v3 on the MLS benchmark.

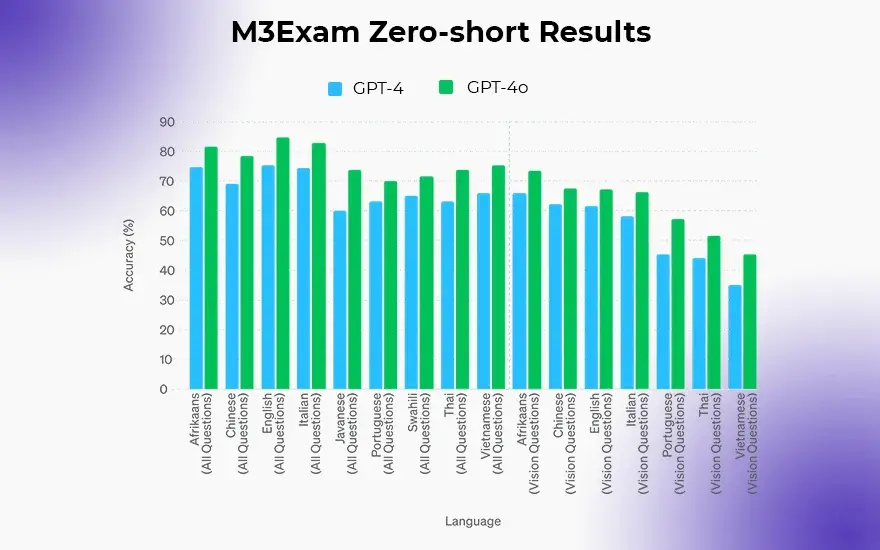

M3Exam Zero-shot Results

The M3Exam benchmark is both a multilingual and a vision evaluation, consisting of multiple-choice questions from other countries' standardized tests that sometimes include figures and diagrams. GPT-4o is stronger than GPT-4 on this benchmark across all languages. (Vision results for Swahili and Javanese are excluded, as there are only 5 or fewer vision questions for these languages.)

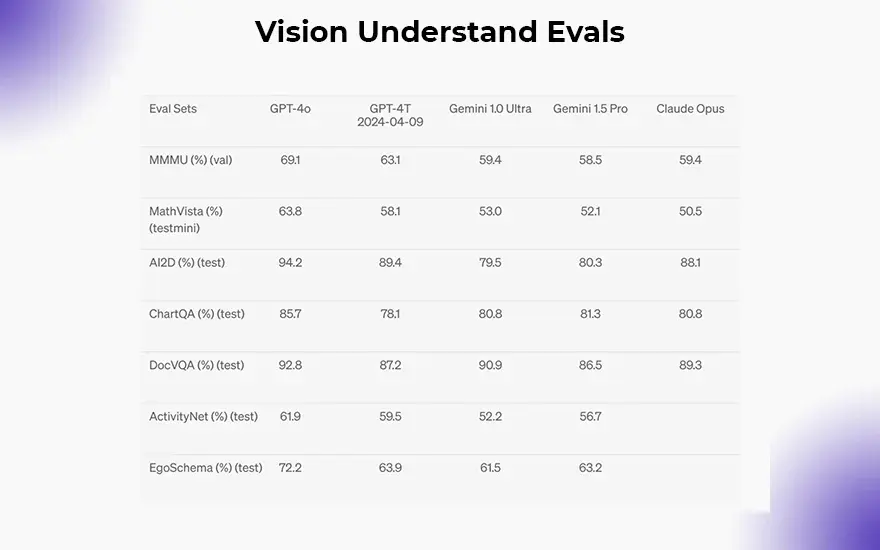

Vision Understanding Evals

GPT-4o achieves state-of-the-art performance on visual perception benchmarks. All vision evals are 0-shot, and MMMU, MathVista, and ChartQA are 0-shot CoT.

Language Tokenization

These 20 languages were chosen as representative of the new tokenizer's compression across different language families.

| Language (token reduction) | Example text |
|---|---|
| Gujarati 4.4x fewer tokens (from 145 to 33) | હેલો, મારું નામ જીપીટી-4o છે. હું એક નવા પ્રકારનું ભાષા મોડલ છું. તમને મળીને સારું લાગ્યું! |
| Telugu 3.5x fewer tokens (from 159 to 45) | నమస్కారము, నా పేరు జీపీటీ-4o. నేను ఒక్క కొత్త రకమైన భాషా మోడల్ ని. మిమ్మల్ని కలిసినందుకు సంతోషం! |
| Tamil 3.3x fewer tokens (from 116 to 35) | வணக்கம், என் பெயர் ஜிபிடி-4o. நான் ஒரு புதிய வகை மொழி மாடல். உங்களை சந்தித்ததில் மகிழ்ச்சி! |
| Marathi 2.9x fewer tokens (from 96 to 33) | नमस्कार, माझे नाव जीपीटी-4o आहे। मी एक नवीन प्रकारची भाषा मॉडेल आहे। तुम्हाला भेटून आनंद झाला! |
| Hindi 2.9x fewer tokens (from 90 to 31) | नमस्ते, मेरा नाम जीपीटी-4o है। मैं एक नए प्रकार का भाषा मॉडल हूँ। आपसे मिलकर अच्छा लगा! |
| Urdu 2.5x fewer tokens (from 82 to 33) | ہیلو، میرا نام جی پی ٹی-4o ہے۔ میں ایک نئے قسم کا زبان ماڈل ہوں، آپ سے مل کر اچھا لگا! |
| Arabic 2.0x fewer tokens (from 53 to 26) | مرحبًا، اسمي جي بي تي-4o. أنا نوع جديد من نموذج اللغة، سررت بلقائك! |
| Persian 1.9x fewer tokens (from 61 to 32) | سلام، اسم من جی پی تی-۴او است. من یک نوع جدیدی از مدل زبانی هستم، از ملاقات شما خوشبختم! |
| Russian 1.7x fewer tokens (from 39 to 23) | Привет, меня зовут GPT-4o. Я — новая языковая модель, приятно познакомиться! |
| Korean 1.7x fewer tokens (from 45 to 27) | 안녕하세요, 제 이름은 GPT-4o입니다. 저는 새로운 유형의 언어 모델입니다, 만나서 반갑습니다! |
| Vietnamese 1.5x fewer tokens (from 46 to 30) | Xin chào, tên tôi là GPT-4o. Tôi là một loại mô hình ngôn ngữ mới, rất vui được gặp bạn! |
| Chinese 1.4x fewer tokens (from 34 to 24) | 你好,我的名字是GPT-4o。我是一种新型的语言模型,很高兴见到你! |
| Japanese 1.4x fewer tokens (from 37 to 26) | こんにちは、私の名前はGPT-4oです。私は新しいタイプの言語モデルです。初めまして! |
| Turkish 1.3x fewer tokens (from 39 to 30) | Merhaba, benim adım GPT-4o. Ben yeni bir dil modeli türüyüm, tanıştığımıza memnun oldum! |
| Italian 1.2x fewer tokens (from 34 to 28) | Ciao, mi chiamo GPT-4o. Sono un nuovo tipo di modello linguistico, piacere di conoscerti! |
| German 1.2x fewer tokens (from 34 to 29) | Hallo, mein Name is GPT-4o. Ich bin ein neues KI-Sprachmodell. Es ist schön, dich kennenzulernen. |
| Spanish 1.1x fewer tokens (from 29 to 26) | Hola, me llamo GPT-4o. Soy un nuevo tipo de modelo de lenguaje, ¡es un placer conocerte! |
| Portuguese 1.1x fewer tokens (from 30 to 27) | Olá, meu nome é GPT-4o. Sou um novo tipo de modelo de linguagem, é um prazer conhecê-lo! |
| French 1.1x fewer tokens (from 31 to 28) | Bonjour, je m'appelle GPT-4o. Je suis un nouveau type de modèle de langage, c'est un plaisir de vous rencontrer! |
| English 1.1x fewer tokens (from 27 to 24) | Hello, my name is GPT-4o. I'm a new type of language model, it's nice to meet you! |
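
The "x fewer tokens" figures in the table are simply the old token count divided by the new one, rounded to one decimal place. A minimal sketch that reproduces a few rows is shown here; the function and variable names are illustrative only, and the counts are copied from the table rather than measured with a tokenizer.

```python
# (tokens before, tokens after), copied from four rows of the table above.
token_counts = {
    "Gujarati": (145, 33),
    "Hindi": (90, 31),
    "Russian": (39, 23),
    "English": (27, 24),
}


def compression_factor(before: int, after: int) -> float:
    """How many times fewer tokens the new tokenizer needs for the same text."""
    return round(before / after, 1)


for language, (before, after) in token_counts.items():
    saved = 1 - after / before  # fraction of tokens no longer needed
    print(f"{language}: {compression_factor(before, after)}x fewer tokens, {saved:.0%} saved")
```

Because API usage is typically metered per token, a larger factor means the same sentence consumes less of a context window and a smaller share of any token-based quota.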

Model Safety and Limitations

GPT-4o has safety built in by design across modalities, through techniques such as filtering the training data and refining the model's behavior through post-training. OpenAI has also created new safety systems to provide guardrails on voice outputs.

OpenAI evaluated GPT-4o according to its Preparedness Framework and in line with its voluntary commitments. Its evaluations of cybersecurity, CBRN (chemical, biological, radiological and nuclear), persuasion, and model autonomy show that GPT-4o does not score above Medium risk in any of these categories. This assessment involved running a suite of automated and human evaluations throughout the model training process. Both pre-safety-mitigation and post-safety-mitigation versions of the model were tested, using custom fine-tuning and prompts, to better elicit model capabilities.

Model Availability

GPT-4o is OpenAI's latest step in pushing the boundaries of deep learning, this time in the direction of practical usability. The company says it spent a great deal of effort over the past two years improving efficiency at every layer of the stack. As a first result of this work, it is able to make a GPT-4-level model available much more broadly. GPT-4o's capabilities will be rolled out iteratively (with extended red-team access starting today).

GPT-4o's text and image capabilities are starting to roll out in ChatGPT today. OpenAI is making GPT-4o available in the free tier, and to Plus users with up to 5x higher message limits. A new version of Voice Mode with GPT-4o will roll out in alpha within ChatGPT Plus in the coming weeks.

Developers can now also access GPT-4o in the API as a text and vision model. GPT-4o is 2x faster, half the price, and has 5x higher rate limits compared to GPT-4 Turbo. OpenAI plans to launch support for GPT-4o's new audio and video capabilities to a small group of trusted partners in the API in the coming weeks.
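
As a rough illustration of what text-and-vision access looks like for developers, here is a minimal sketch using the official `openai` Python package and its Chat Completions endpoint. The helper names `build_messages` and `ask_gpt4o` and the example URL are assumptions for this post; the sketch also assumes the package is installed and an `OPENAI_API_KEY` environment variable is set.

```python
from typing import Optional


def build_messages(prompt: str, image_url: Optional[str] = None) -> list:
    """Build a chat message list; attach an image part when a URL is given."""
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return [{"role": "user", "content": content}]


def ask_gpt4o(prompt: str, image_url: Optional[str] = None) -> str:
    """Send one text (and optional image) request to GPT-4o and return the reply."""
    # Imported lazily so build_messages works even without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=build_messages(prompt, image_url),
    )
    return response.choices[0].message.content


# Example call (requires network access and a valid API key):
# print(ask_gpt4o("What does this chart show?", "https://example.com/chart.png"))
```

Keeping the message construction separate from the network call makes it easy to inspect or test the payload without spending API quota.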

Advanced Voice Processing

GPT-4o offers advanced features such as real-time conversation and accurate speech synchronization. This means it can converse with users instantly, using natural and fluid voices. These features improve the user experience, making interactions with AI seamless and enjoyable.

GPT-4o: Free or Paid?

GPT-4o is freely available to all users, subject to certain usage limits. Paid users have access to five times the capacity of free users. This approach allows OpenAI to make the technology more widely available while giving those who need it expanded capabilities.

Thinking About the Future

With technology as powerful as GPT-4o, it is essential to use it carefully and responsibly. OpenAI is aware of this and is working to ensure that GPT-4o is safe and does not create or spread false information or undue bias. Users should consider the pros and cons of using AI writing tools when implementing GPT-4o in their workflows.

Conclusion

The creation of OpenAI’s GPT-4 Omni represents a substantial leap forward for artificial intelligence. With its remarkable real-time perception and response capabilities, GPT-4o promises to transform industries and empower users on a global scale. By prioritizing safety, accessibility and innovation, OpenAI is paving the way for a future in which AI enhances human experience and interaction.

FAQs

1. Is GPT-4o available for free?

2. How does GPT-4o ensure safety and mitigate risks?

3. What are some applications of GPT-4o?

4. How fast is GPT-4o compared to previous models?

5. Can developers access GPT-4o for integration into their applications?

Content writer at @AssignmentGPT

Rashi Vashisth is a content writer who helps brands put their thoughts into words. She creates blogs, website content, and brand stories that are easy to understand and feel genuine. Her writing style focuses on keeping things clear and making sure the message connects with the right people.
