
Imagine having to follow a movie using only its soundtrack: no visuals and no subtitles, so you have to understand the whole story from sound alone. That feels incomplete, or at least tedious, right? Earlier AI systems worked in a similarly limited way, because each one handled only a single type of data. Today, many AI models are advanced enough to see, listen, read, and even speak. Not every AI system has all of these capabilities, but many do, and this is what is called multimodal AI.

Multimodal AI is transforming how machines understand and interact with people and the world. It allows AI systems to process multiple types of data at the same time and make sense of information across formats, whether that information is text, images, audio, or video. In this guide, we will look at how multimodal AI works, why it matters so much today, where it is used in the real world, which trends are shaping it, and where it is likely headed.

Quick Summary

Multimodal AI processes and integrates multiple data types (modalities), such as text, audio, images, and video. A typical system has an input module for each data type, a fusion module that combines them, and an output module that makes decisions or generates content.

Multimodal AI offers many benefits, chief among them higher accuracy, greater creative capability, cross-domain learning, and richer, more human-like interactions. It also faces real challenges, including data alignment, representation, ethical issues, and high data requirements. It is used in fields ranging from healthcare and robotics to marketing and disaster management.

What is Multimodal AI?

Multimodal AI is a type of artificial intelligence that integrates and processes information from multiple modalities. These modalities can include text, audio, video, images, and even sensor data; for example, autonomous cars and manufacturing systems rely heavily on sensor data to decide how to act. Unlike unimodal AI, which processes only one type of input, multimodal AI combines different forms of data to build a richer understanding of context.

AI-powered virtual assistants are some of the best examples of multimodal AI: they can listen to your voice commands, recognize your facial expressions from video, and respond based on all of these inputs. Multimodal tools and software therefore offer a more natural and intuitive interaction experience.

How Does Multimodal AI Work?

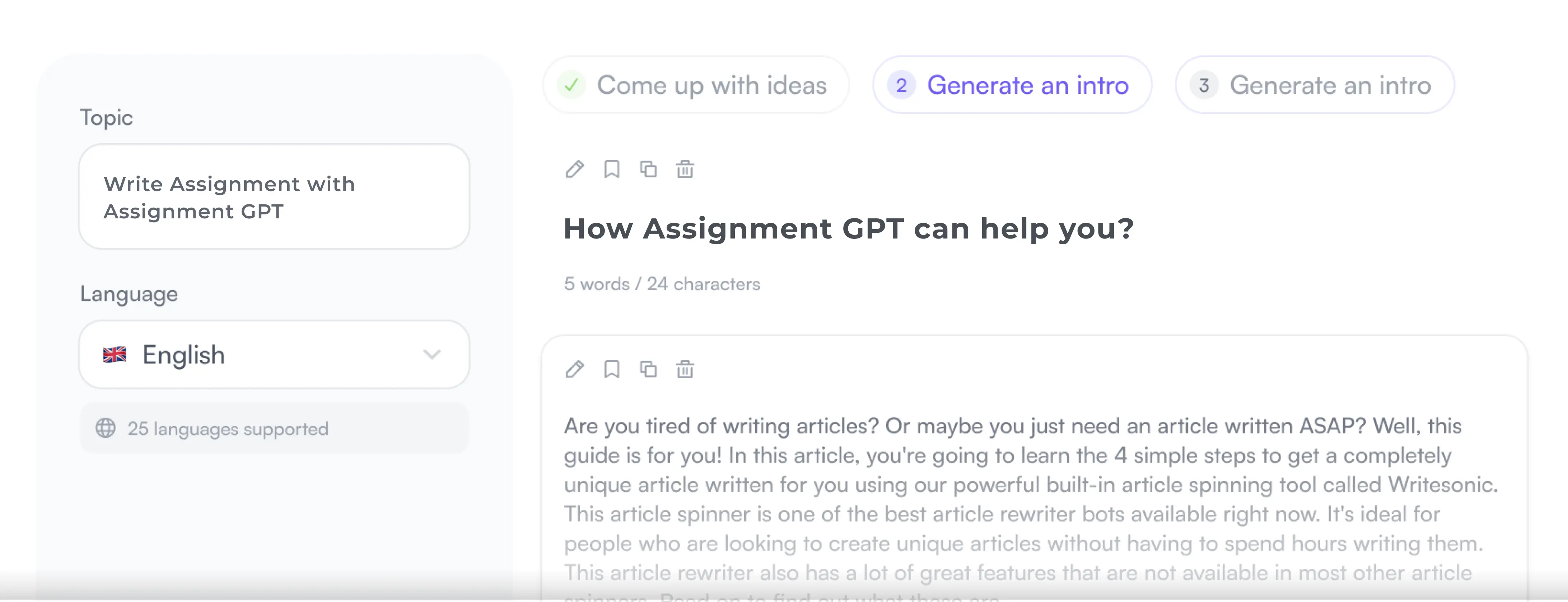

Many new multimodal AI systems are being released, and their components and inner workings can differ. However, most of the popular multimodal AI systems are built around three main components. Let's look at each of them in more detail.

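1. Input Module: Each data type is handled by a neural network suited to it, such as a CNN (convolutional neural network) for images and an RNN (recurrent neural network) or transformer for text and audio. These networks turn the raw input into internal features the model can understand.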

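2. Fusion Module: This module aligns and combines the features from different modalities into a single, unified representation, using methods such as early fusion (merging the data at the input level), mid-level fusion (merging intermediate features), or late fusion (merging the separate predictions of each modality). This lets the model interpret different kinds of information together.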

3. Output Module: In the final step, this module generates the output from the combined representation, which may be a decision, a prediction, or creative content.

This architecture lets multimodal AI analyze complex data, capture the relationships between different data types, and produce results that are context-aware and accurate.
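The three modules can be sketched in a few lines of Python. This is a toy illustration, not how production systems are built: the encoders are hypothetical hand-written stand-ins for trained networks, fusion is plain concatenation of the feature vectors (one simple form of mid-level fusion), and the output weights are hand-picked rather than learned.

```python
from typing import Dict, List

# --- Input module: one toy "encoder" per modality (hypothetical stand-ins) ---
# Real systems would use trained networks here (e.g. a CNN for images,
# a transformer for text); these just map raw input to a few numbers.

POSITIVE = {"good", "great", "happy"}
NEGATIVE = {"bad", "sad", "terrible"}


def encode_text(text: str) -> List[float]:
    """Count positive and negative words."""
    words = text.lower().split()
    return [
        float(sum(w in POSITIVE for w in words)),
        float(sum(w in NEGATIVE for w in words)),
    ]


def encode_image(pixels: List[float]) -> List[float]:
    """Mean brightness and contrast (max - min) of grayscale pixel values."""
    return [sum(pixels) / len(pixels), max(pixels) - min(pixels)]


def encode_audio(samples: List[float]) -> List[float]:
    """Average loudness: mean absolute amplitude of the waveform."""
    return [sum(abs(s) for s in samples) / len(samples)]


# --- Fusion module: mid-level fusion by concatenating feature vectors ---
def fuse(features: Dict[str, List[float]]) -> List[float]:
    fused: List[float] = []
    for name in sorted(features):  # fixed order so each position keeps its meaning
        fused.extend(features[name])
    return fused


# --- Output module: a linear score turned into a decision ---
def output_head(fused: List[float], weights: List[float], bias: float) -> str:
    score = sum(w * x for w, x in zip(weights, fused)) + bias
    return "positive" if score > 0 else "negative"


features = {
    "text": encode_text("What a great happy day"),
    "image": encode_image([0.2, 0.8, 0.6]),
    "audio": encode_audio([0.1, -0.3, 0.2]),
}
fused = fuse(features)  # [audio loudness, image mean, image contrast, text pos, text neg]
WEIGHTS = [0.5, 0.5, 0.0, 1.0, -1.0]  # hand-picked, not learned
print(output_head(fused, WEIGHTS, bias=-1.0))  # prints "positive"
```

Swapping `fuse` for a function that averages separate per-modality predictions would turn this into late fusion, while merging the raw inputs before any encoder runs would be early fusion.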

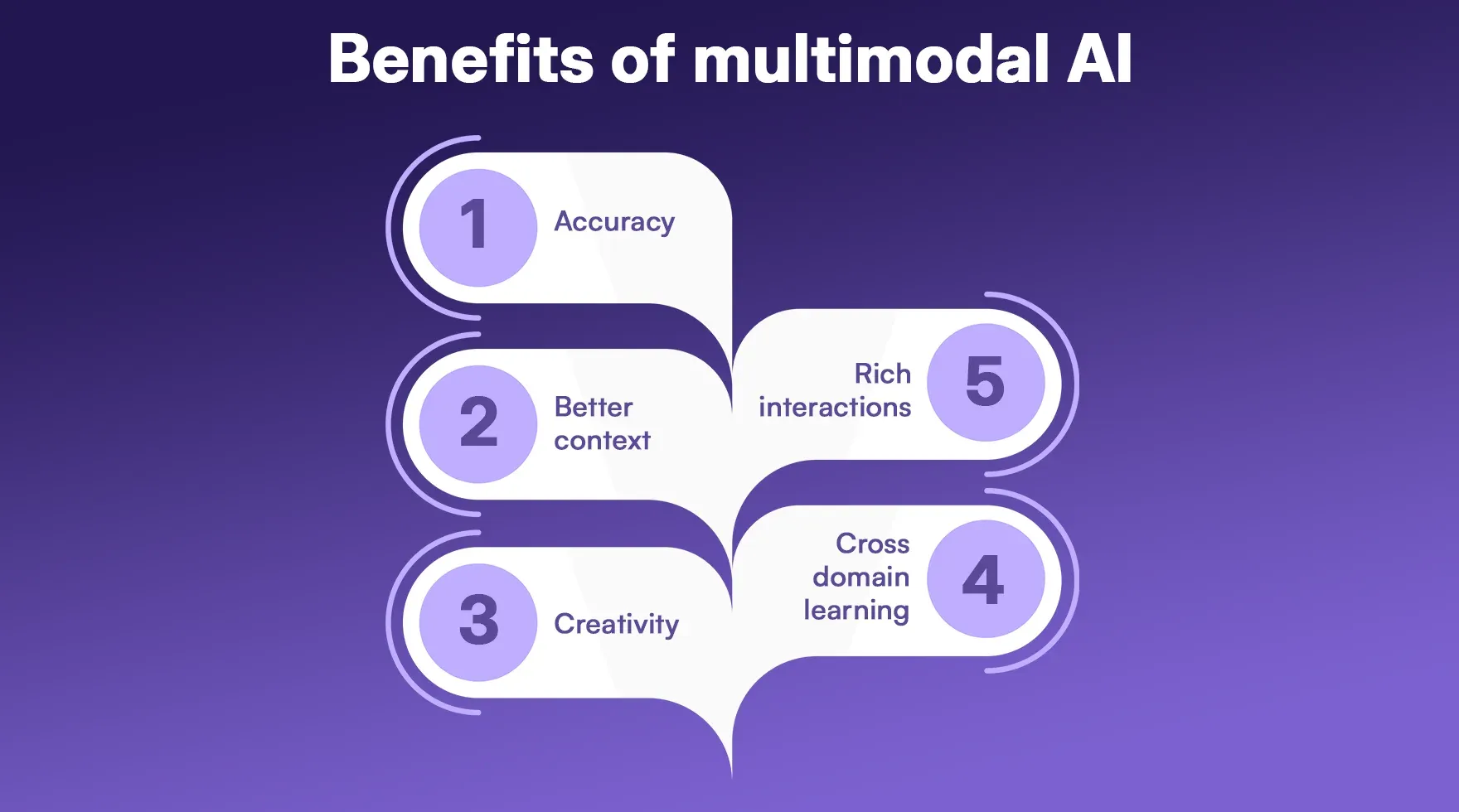

Benefits of Multimodal AI

The multimodal AI systems currently available are useful for many different tasks. Let's look at their core benefits in detail.

1. Accuracy: Because multimodal AI draws on several data types at once, and is typically trained on large datasets, it can make more precise predictions and decisions than a system that sees only one kind of input.

2. Better Context: Understanding images and audio alongside text was nearly impossible for unimodal AI, but it comes naturally to multimodal AI. It understands input in context and produces output that reflects the full input.

3. Creativity: You have probably heard of DALL-E and Runway Gen-2. These models use multimodal AI to interpret text prompts and generate images and videos from them, which shows how much creative potential AI has.

4. Cross-Domain Learning: Because it is multimodal, it can apply skills learned in one modality to another, and this cross-domain integration lets it work well across many sectors.

5. Rich Interactions: Multimodal AI places a strong focus on user experience and aims to provide human-like interactions, delivering output in voice, text, gestures, or even visual form.
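One concrete way context and skills carry across modalities is a shared embedding space: vision-language models trained contrastively (CLIP is a well-known example) map text and images into the same vector space, so they can be compared directly. The sketch below assumes made-up three-dimensional embeddings (real models learn vectors with hundreds of dimensions) and shows only the comparison step: cosine similarity between text and image vectors, in both directions.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(query: List[float], candidates: Dict[str, List[float]]) -> str:
    """Return the candidate whose embedding is most similar to the query."""
    return max(candidates, key=lambda name: cosine_similarity(query, candidates[name]))


# Hypothetical 3-d embeddings; imagine the axes as roughly "animal", "outdoors", "vehicle".
image_embeddings = {
    "dog_in_park.jpg": [0.9, 0.8, 0.1],
    "red_car.jpg": [0.0, 0.3, 0.95],
    "cat_on_sofa.jpg": [0.85, 0.05, 0.0],
}
caption_embeddings = {
    "a dog playing outside": [0.8, 0.9, 0.0],
    "a parked car": [0.0, 0.2, 1.0],
}

# Text -> image: find the picture that best matches a caption.
print(best_match(caption_embeddings["a dog playing outside"], image_embeddings))  # dog_in_park.jpg
# Image -> text: the same space works in the other direction.
print(best_match(image_embeddings["red_car.jpg"], caption_embeddings))  # a parked car
```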


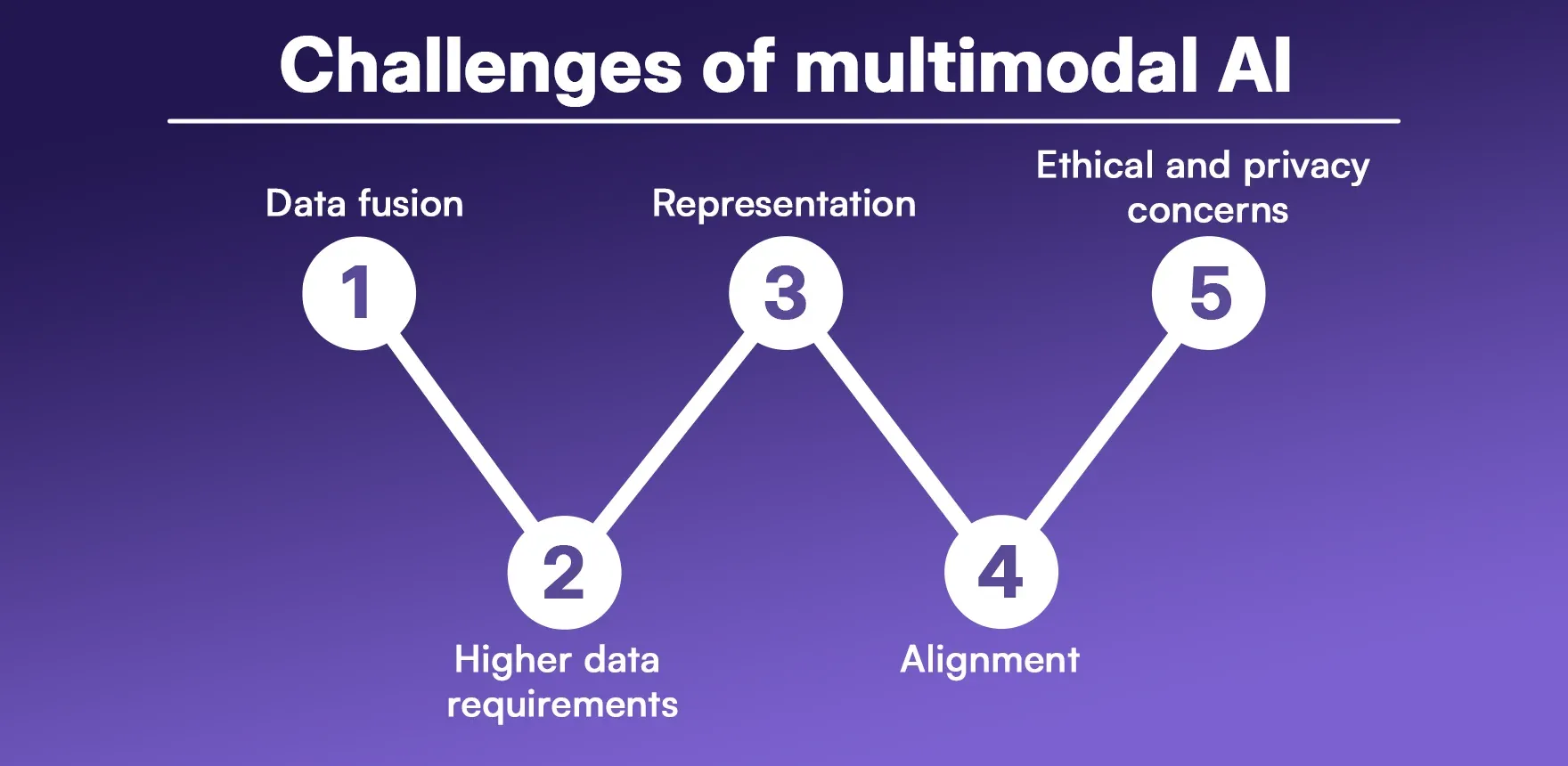

Challenges of Multimodal AI

Although multimodal AI is an advanced technology, it is still evolving, and many existing systems have not yet been updated to support it. As a result, it faces several challenges. Let us discuss them.

1. Data Fusion: Integrating diverse data sources, analyzing them together, and producing output in different formats is complex, and errors can creep into data quality and the final output.

2. Higher Data Requirements: Multimodal systems need large volumes of high-quality, labelled training data. To get it, some systems store data from human interactions and learn from it, and it can take a long time to bring a multimodal model or platform to a mature state.

3. Representation: Creating a unified representation from noisy or incomplete datasets is very difficult, and when data from some modalities is noisy or missing, the final results can become inconsistent.

4. Alignment: Synchronizing data in time and space, such as matching lip movements with speech, is still hard for some models, and multimodal systems have sometimes been seen to give poorer results when producing output in languages other than English.

5. Ethical and Privacy Concerns: Using sensitive multimodal data (e.g., video surveillance, voice commands) raises several issues: whether the model's output is biased, whether these AI platforms need user consent before storing interaction data, and how they protect user data.
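Temporal alignment, as in matching lip movement with speech, often starts with putting the two streams on a common timeline. The sketch below assumes both streams already carry timestamps on a shared clock (here in milliseconds, with video at 25 frames per second and an audio feature every 10 ms) and pairs each video frame with the nearest audio feature. It does not estimate an unknown offset between the streams, which real systems may also need to do.

```python
import bisect
from typing import List, Sequence


def align_to_frames(frame_times: Sequence[int], audio_times: Sequence[int]) -> List[int]:
    """For each video frame timestamp, return the index of the nearest audio feature.

    Both inputs are timestamps in milliseconds on a shared clock; audio_times
    must be sorted in ascending order. Exact ties go to the earlier audio feature.
    """
    matches: List[int] = []
    for t in frame_times:
        i = bisect.bisect_left(audio_times, t)  # first audio timestamp >= t
        if i == 0:
            matches.append(0)
        elif i == len(audio_times):
            matches.append(len(audio_times) - 1)  # frame is past the last audio feature
        else:
            before, after = audio_times[i - 1], audio_times[i]
            matches.append(i if after - t < t - before else i - 1)
    return matches


video_frames_ms = [0, 40, 80, 120, 160]      # 25 frames per second
audio_features_ms = list(range(0, 200, 10))  # one audio feature every 10 ms
print(align_to_frames(video_frames_ms, audio_features_ms))  # [0, 4, 8, 12, 16]
```

Once each frame has a matching audio feature, the paired features can be passed to the fusion step together.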

Use Cases of Multimodal AI

Multimodal AI is already used in many sectors, for both creative tasks and more critical work. Let's look at its most important use cases.

Healthcare: Multimodal AI is used effectively in healthcare, where models combine different medical images, such as MRI scans, to produce more precise reports. Many models also use patient records or genetic data to help diagnose specific conditions.

Robotics: Multimodal AI is widely used in robotics, where it helps robots perceive their environment and act on it, for example by listening to audio, seeing nearby objects, and taking action accordingly.

Language Processing: It is widely used in sentiment analysis, where facial expressions, vocal tone, and text are considered together.
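Sentiment analysis is a natural fit for late fusion: each modality produces its own score, and the scores are combined afterwards. In this minimal sketch the per-modality scores (in the range -1 to 1) and the weights are hypothetical, and a missing modality is simply skipped, which is one simple way to cope with the incomplete data described under the challenges above.

```python
from typing import Dict, Optional


def late_fusion_sentiment(
    scores: Dict[str, Optional[float]], weights: Dict[str, float]
) -> float:
    """Weighted average of per-modality sentiment scores in [-1, 1].

    A modality whose score is None (e.g. the camera is off) is skipped, and the
    result is divided by the weights actually used, so they act as if they sum to 1.
    """
    total = 0.0
    weight_sum = 0.0
    for modality, score in scores.items():
        if score is None:
            continue
        w = weights.get(modality, 0.0)
        total += w * score
        weight_sum += w
    if weight_sum == 0:
        raise ValueError("no usable modality scores")
    return total / weight_sum


WEIGHTS = {"text": 0.5, "voice": 0.3, "face": 0.2}  # hypothetical, not tuned

# "Great, just great." said in a flat voice with a frown.
sarcastic = {"text": 0.6, "voice": -0.8, "face": -0.5}
print(late_fusion_sentiment(sarcastic, WEIGHTS))  # slightly below zero

camera_off = {"text": 0.6, "voice": -0.8, "face": None}
print(late_fusion_sentiment(camera_off, WEIGHTS))
```

The words alone look positive, but tone of voice and facial expression pull the combined score slightly below zero, the kind of context a text-only model would miss. With the camera off, the score tips slightly positive again, which shows how a missing modality can shift the result.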

AR/VR: It enhances immersive experiences by using gesture, voice, and spatial understanding.

Marketing: Multimodal AI is well suited to analyzing social media content (text + images). It can detect trends and show users personalized ads, and because such campaigns are highly targeted, they tend to get better engagement.

Customer Support: AI chatbots are used in almost every major industry, and many can answer users in text, voice, and image formats. This is possible because of multimodal AI; without it, chatbots could not understand questions that arrive as voice or images.

Disaster Management: Multimodal systems are proving very useful in natural disaster management. They use satellite images and sensor data to issue early warnings and spread awareness through social media and other networks, which helps authorities manage disasters and reduce casualties.


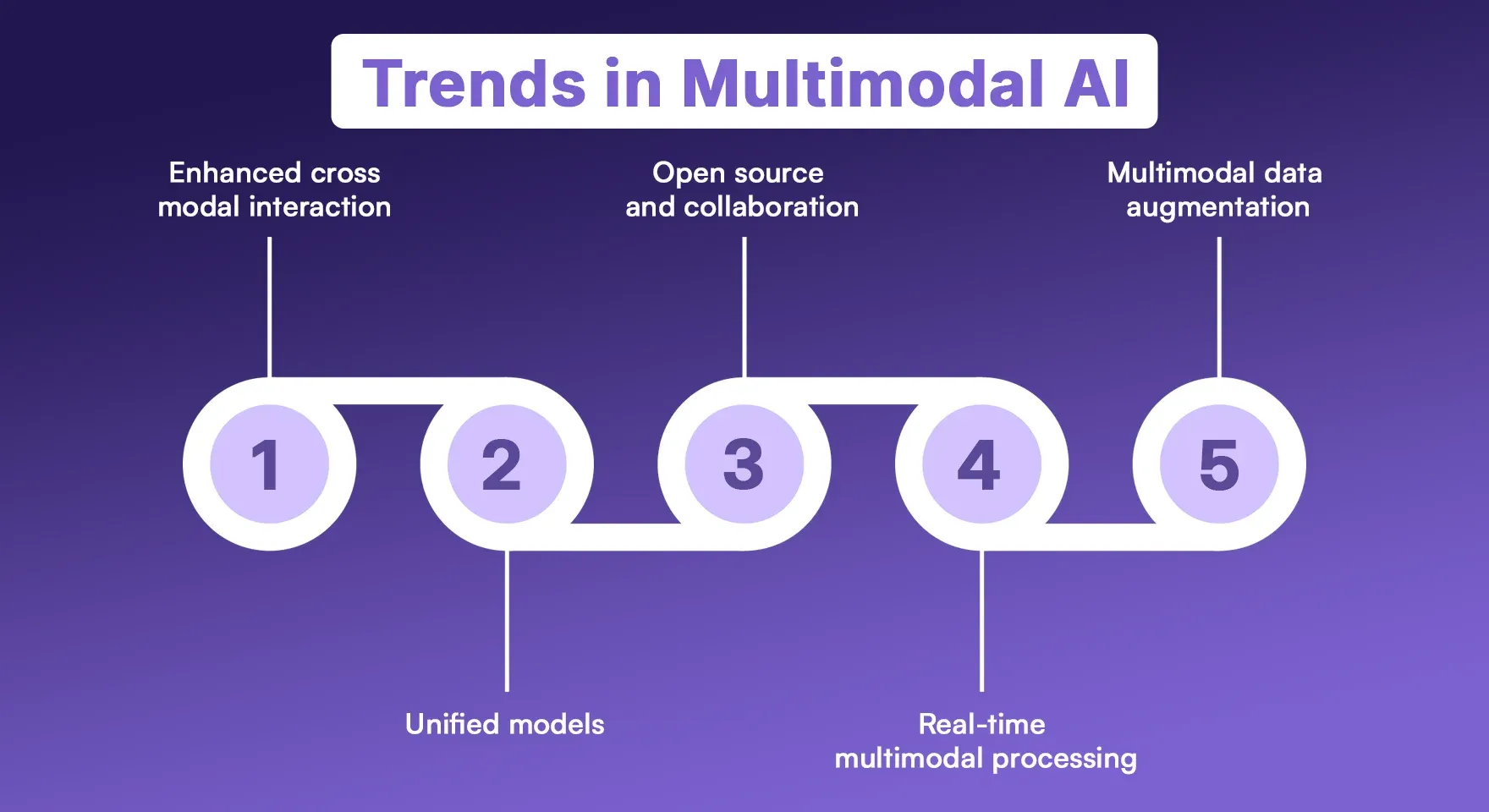

Trends in Multimodal AI

Let's look at the trends of the last few years to understand where multimodal AI is heading: what is being developed and what the industry is using it for.

1. Enhanced Cross-Modal Interaction: The industry increasingly uses multimodal AI for complex, coordinated tasks, because advanced attention mechanisms let different modalities form deeper connections with each other.

2. Unified Models: Many models now follow a unified design, in which a single model, such as GPT-4 Vision or Google Gemini, can handle multiple data types.

3. Open Source and Collaboration: Although AI model development is often kept confidential, some projects are open source, and platforms like Hugging Face foster collaboration. Such projects promote AI research and democratize access to AI.

4. Real-Time Multimodal Processing: Systems that process several modalities in real time are extremely useful in AR, autonomous vehicles, and smart assistants.

5. Multimodal Data Augmentation: Synthetic datasets that combine modalities help improve how multimodal models learn from and process data.
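The attention mechanisms behind cross-modal interaction can be illustrated with cross-attention, where the queries come from one modality (text tokens) and the keys and values come from another (image patches). This is a minimal sketch of scaled dot-product attention with made-up vectors; real models also learn projection matrices for the queries, keys, and values and run many attention heads in parallel, both of which are omitted here.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d)) V, with Q from text and K, V from image patches."""
    d = len(keys[0])
    outputs: Matrix = []
    for q in queries:  # one query per text token
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this token attends to each patch
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


text_queries = [[1.0, 0.0], [0.0, 1.0]]               # e.g. tokens "dog" and "grass"
patch_keys = [[10.0, 0.0], [0.0, 10.0], [0.0, 0.0]]   # three image patches
patch_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
attended = cross_attention(text_queries, patch_keys, patch_values)
for row in attended:
    print([round(x, 3) for x in row])
```

Each text token ends up with a mix of image-patch information weighted by relevance: the first token draws almost entirely on the first patch and the second token on the second.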

Future of Multimodal AI

The future of multimodal AI looks promising. As computing power grows and data becomes more accessible, AI systems will produce more context-aware and reliable results. Multimodal AI is set to play an important role in sectors ranging from autonomous vehicles to personalized healthcare, where it has great potential to improve people's quality of life.

Emerging technologies such as edge AI and federated learning will also support multimodal capabilities, allowing them to grow while preserving user privacy. Over time, multimodal AI will redefine how humans and machines work together, making that collaboration more efficient and giving users more inclusive results.
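The privacy benefit of federated learning is easiest to see in a toy example. The sketch below assumes the common federated averaging (FedAvg) scheme and a hypothetical one-feature linear model rather than a real multimodal network: each client trains on its own data, and only the model parameters travel to the server, which averages them weighted by how much data each client holds.

```python
from typing import List, Sequence, Tuple

Params = Tuple[float, float]  # (w, b) for the toy model y = w * x + b
Dataset = Sequence[Tuple[float, float]]


def local_update(params: Params, data: Dataset, lr: float = 0.1, steps: int = 50) -> Params:
    """Gradient descent on mean squared error, run only on the client's own data."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_average(updates: List[Params], sizes: List[int]) -> Params:
    """Server step: average client parameters, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(p[0] * n for p, n in zip(updates, sizes)) / total
    b = sum(p[1] * n for p, n in zip(updates, sizes)) / total
    return w, b


# Each client's raw data stays local; only (w, b) is sent to the server.
clients = [
    [(0.0, 1.0), (0.5, 2.0)],
    [(1.0, 3.0), (0.25, 1.5), (0.75, 2.5)],
]  # hypothetical points on the line y = 2x + 1

global_params: Params = (0.0, 0.0)
for _ in range(30):  # 30 communication rounds
    updates = [local_update(global_params, data) for data in clients]
    global_params = federated_average(updates, [len(d) for d in clients])

print(global_params)  # approaches (2.0, 1.0)
```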

Conclusion

Multimodal AI isn't just an upgrade to traditional AI; it is an important step forward for human progress. By fusing multiple data types, it gains a more human-like understanding of the world. Whether it is revolutionizing healthcare or enhancing entertainment, it will make everyday interactions smoother and substantially transform both daily life and industries.

As with any advanced technology, multimodal AI brings new challenges. AI companies will therefore bear greater responsibility for building robust, privacy-aware data security systems, prioritizing ethical outcomes, and setting guidelines to avoid biased output.

FAQs

1. What is an example of multimodal AI?

2. How is multimodal AI different from traditional AI?

3. Is ChatGPT a multimodal AI?

4. What are the key technologies behind multimodal AI?

5. What industries will benefit most from multimodal AI?

Content writer at @AssignmentGPT

Ashu Singh, content writer at AssignmentGPT, crafting clear, engaging content that simplifies complex tech topics, with a focus on AI tools and digital platforms for empowered user experiences.
